Comprehensive Product Lifecycle Management for LLM-Based Software Products

Generative AI is changing how businesses build user experiences and improve productivity, and Large Language Models (LLMs) sit at the forefront of that change. They can address a range of business challenges, from automating customer interactions to generating personalized content. However, successfully developing and deploying LLM-based products requires more than excitement about AI’s potential. It demands a structured approach to product lifecycle management.

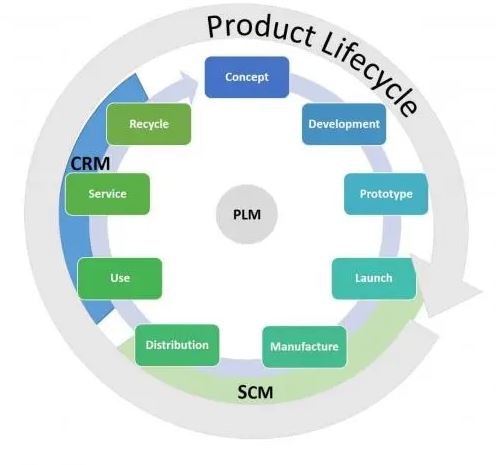

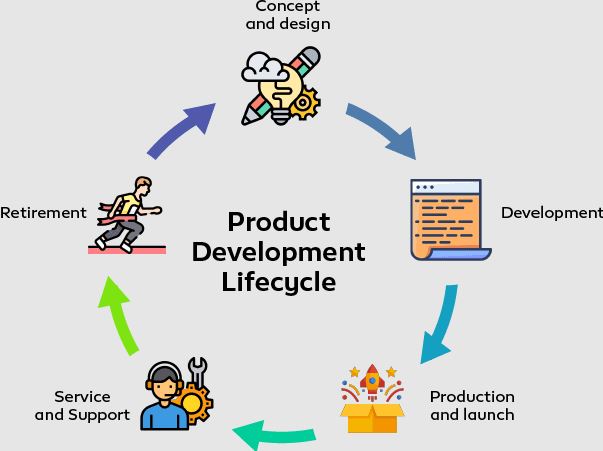

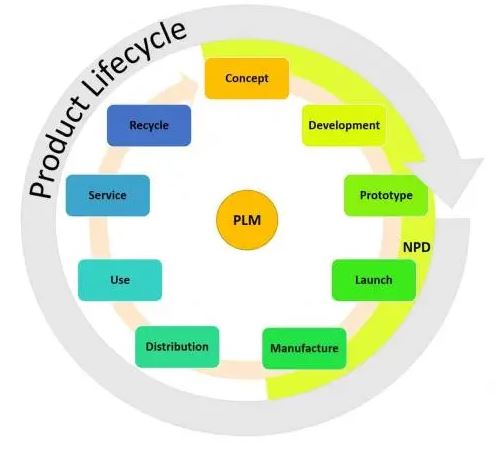

Product lifecycle management (PLM) is crucial for navigating the complexities of LLM product development. This strategic process involves multiple stages, from planning to deployment and monitoring, ensuring that every step is meticulously executed for optimal results. AI21 Labs, with its extensive experience in developing LLM solutions for top enterprises like Ubisoft, Clarivate, and Carrefour, has honed its ability to generate predictable outcomes and revenue for clients through comprehensive PLM strategies.

For companies aiming to integrate LLM-based products into their workflows, following a structured product lifecycle management approach is essential. This includes identifying key stakeholders, gathering the necessary data, and customizing models to fit specific use cases. By applying a strategic PLM approach to LLM-based product development, companies can reduce development risks, improve performance, and maintain a competitive edge in the rapidly evolving generative AI landscape.

In this guide, we will break down the key stages of product lifecycle management for LLM software development, exploring how businesses can effectively plan, build, deploy, and monitor these innovative AI solutions to meet their goals and drive growth.

Section 1: Preparation – Planning Your LLM Development Project

Objective: Define the goals and set up the strategic plan for the LLM project

The first and most critical step in developing an LLM-based product is setting clear goals and creating a strategic plan. This preparation phase involves understanding the specific needs of the business and defining the product’s purpose. This stage ensures that the project stays focused, meets objectives, and has a roadmap to guide its progression. The goal is to map out the expected outcomes, outline how the LLM will deliver value, and detail the steps required to build a successful product. Whether you are working internally or in collaboration with an external LLM provider, meticulous planning is key.

Stakeholder Identification

For any LLM project to succeed, it is essential to identify key stakeholders who will influence decision-making and implementation. These stakeholders typically include:

- CEOs and CTOs: Responsible for high-level strategic decisions and ensuring the alignment of the project with broader company goals.

- Product Managers: These individuals act as a bridge between the business and technical teams, ensuring the product aligns with both business needs and technological feasibility.

- Data Scientists, Engineers, and Designers: Their technical expertise is crucial in building and fine-tuning the LLM product.

- Legal and Compliance Teams: Responsible for ensuring the product complies with data privacy and security regulations.

Resource Collection

Gathering and organizing the necessary resources is a crucial step in the preparation process. This includes collecting data, which serves as the backbone for training and fine-tuning the LLM. For instance, an ecommerce company developing an LLM-based product description generator would need to gather product details, style guides, and any existing product descriptions. These data sets are essential for creating accurate and brand-consistent descriptions. At this stage, it’s vital to identify any potential limitations, such as data accessibility, and to find solutions early on. Overcoming these challenges early ensures that the project remains on track and that sensitive data is handled appropriately.

Legal and Compliance

Involving legal and compliance teams early in the project is necessary to address privacy concerns and ensure that all data collection and usage practices adhere to legal regulations. This is particularly important for industries dealing with sensitive data, such as healthcare or finance. Early involvement of these teams can help mitigate risks, prevent legal issues, and streamline the project’s development.

Section 2: Building the Product

Objective: Transition from planning to building the LLM

After completing the preparation phase, it’s time to move on to building the product. This phase involves implementing the strategic plan and ensuring that the LLM is correctly configured to meet the desired outcomes.

Task 1 – Choosing a Language Model

One of the first technical decisions involves selecting the right language model for the project. Several factors should be considered when choosing a model, including:

- Cost: Some models are more expensive to run due to their size and complexity.

- Performance: The model’s ability to handle specific tasks, such as processing long text inputs or generating human-like responses.

- Use Case Complexity: Choosing a model that fits the technical and practical requirements of the project.
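The trade-off between these factors can be made explicit with a simple scoring function. The following is a minimal sketch, not a prescribed method: the candidate names, prices, quality scores, and the `cost_weight` parameter are all hypothetical values chosen for illustration, and the quality score is assumed to come from your own evaluation set.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    cost_per_1k_tokens: float  # hypothetical price, in USD
    quality_score: float       # 0..1, assumed to come from your own evaluation
    max_context_tokens: int    # longest input the model accepts


def pick_model(candidates, min_context_tokens, cost_weight=0.5):
    """Return the best-scoring candidate that can handle the required input length."""
    eligible = [c for c in candidates if c.max_context_tokens >= min_context_tokens]
    if not eligible:
        raise ValueError("no candidate meets the context requirement")
    max_cost = max(c.cost_per_1k_tokens for c in eligible)

    def score(c):
        # Cheaper models get a higher cost term; the most expensive one gets 0.
        cost_term = 1 - c.cost_per_1k_tokens / max_cost if max_cost > 0 else 0.0
        return (1 - cost_weight) * c.quality_score + cost_weight * cost_term

    return max(eligible, key=score)


candidates = [
    Candidate("small-model", 0.5, 0.70, 8_000),
    Candidate("large-model", 2.0, 0.90, 32_000),
]
short_input_choice = pick_model(candidates, min_context_tokens=4_000)
long_input_choice = pick_model(candidates, min_context_tokens=16_000)
```

With these illustrative numbers, the cheaper model wins when inputs are short, while the long-input requirement filters it out and leaves only the larger model.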

Task 2 – User Flow and Wireframes

The next step is designing the user interface and determining how users will interact with the product. This includes defining the inputs the user will provide, the format of these inputs, and the outputs the model will generate. Detailed wireframes help streamline development by clearly outlining the expected flow and user experience, saving time during the build.
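One way to pin down the inputs and their format before any interface is built is to write them as a small data contract. The sketch below assumes a product description use case; the class name `DescriptionRequest` and its fields are hypothetical and would be replaced by whatever the wireframes actually specify.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class DescriptionRequest:
    """Hypothetical input contract for a product description generator."""
    product_name: str
    features: list[str]
    max_words: int = 80

    def validate(self) -> list[str]:
        """Return a list of human-readable problems; empty means the input is usable."""
        errors = []
        if not self.product_name.strip():
            errors.append("product_name is required")
        if not self.features:
            errors.append("at least one feature is required")
        if self.max_words <= 0:
            errors.append("max_words must be positive")
        return errors


valid_request = DescriptionRequest("Wool throw blanket", ["merino wool", "machine washable"])
invalid_request = DescriptionRequest("", [], max_words=0)
```

Returning a list of problems, rather than raising on the first one, lets the interface show the user everything that needs fixing at once.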

Task 3 – Data Curation

Data curation involves collecting, cleaning, and preparing the data that will be used to train and test the model. This step is critical in ensuring that the model can handle the variety of inputs it will encounter in real-world scenarios. Data should be carefully curated to represent a range of possible inputs and outputs, ensuring the model learns from diverse examples.
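A minimal sketch of the cleaning and splitting steps is shown below. It assumes each record is a dictionary with `input` and `output` keys, which is an illustrative format rather than one required by any particular vendor; real pipelines usually need domain-specific checks on top of this.

```python
import random


def curate(records, test_fraction=0.2, seed=0):
    """Normalize whitespace, drop empty and duplicate records, then split train/test."""
    seen = set()
    cleaned = []
    for rec in records:
        text = " ".join(rec["input"].split())
        output = " ".join(rec["output"].split())
        if not text or not output:
            continue  # skip incomplete examples
        key = text.lower()
        if key in seen:
            continue  # skip duplicates that differ only in case or spacing
        seen.add(key)
        cleaned.append({"input": text, "output": output})

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    rng.shuffle(cleaned)
    n_test = max(1, int(len(cleaned) * test_fraction)) if len(cleaned) > 1 else 0
    return cleaned[n_test:], cleaned[:n_test]


records = [
    {"input": "Red  mug", "output": "A bright red mug."},
    {"input": "red mug", "output": "Duplicate entry."},
    {"input": "", "output": "Missing input."},
    {"input": "Blue mug", "output": "A blue mug."},
    {"input": "Green mug", "output": "A green mug."},
]
train_set, test_set = curate(records)
```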

Task 4 – Model Training and Prompt Engineering

Training the LLM involves customizing it to fit the project’s specific needs. There are two common approaches:

- Training the Model: Feeding the LLM examples of inputs and expected outputs to teach it the intricacies of a particular task.

- Prompt Engineering: Crafting specific input queries or instructions that guide the model’s responses to produce desired results.

Both methods can be combined to optimize performance, allowing the model to generate highly relevant and accurate responses based on user input.
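For the training approach, the curated input and output pairs typically have to be serialized into a file the training service can read. The sketch below writes one JSON object per line (JSONL); the field names `prompt` and `completion` are assumptions for illustration, so check your provider's documentation for the format it actually expects.

```python
import json


def to_jsonl(examples):
    """Serialize input/output pairs as JSON Lines, one training example per line."""
    lines = []
    for ex in examples:
        # Field names are hypothetical; providers differ in what they require.
        record = {"prompt": ex["input"], "completion": ex["output"]}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)


examples = [
    {"input": "Wool throw blanket, merino wool", "output": "A soft merino throw for cool evenings."},
    {"input": "Ceramic mug, 350 ml", "output": "A sturdy 350 ml mug for your morning coffee."},
]
training_file_content = to_jsonl(examples)
```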

Task 5 – Model Adjustments and Evaluation

Once the model is trained, the next step is to tune its behavior by adjusting generation parameters, such as sampling temperature or maximum output length. This step improves the model’s ability to produce high-quality, human-like responses. After tuning, the model must be evaluated against predefined benchmarks to ensure it meets the project’s performance requirements. Evaluation helps identify areas where the model excels and areas where further adjustments may be needed.
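Evaluation against a benchmark can be sketched as a loop over held-out test cases with a pass-rate threshold. In the example below, the keyword check is a deliberately simple stand-in for whatever quality criteria the project defines, `generate` is any callable that wraps the model, and the 0.8 threshold is an arbitrary illustrative value.

```python
def evaluate(generate, test_cases, threshold=0.8):
    """Run each test case through the model and compare the pass rate to a benchmark."""
    passed = 0
    failures = []
    for case in test_cases:
        output = generate(case["input"]).lower()
        # A case passes only if every required keyword appears in the output.
        if all(kw.lower() in output for kw in case["required_keywords"]):
            passed += 1
        else:
            failures.append(case["input"])
    pass_rate = passed / len(test_cases) if test_cases else 0.0
    return {
        "pass_rate": pass_rate,
        "meets_benchmark": pass_rate >= threshold,
        "failures": failures,
    }


def stub_generate(text):
    """Stand-in for a real model call, used only to make the sketch runnable."""
    return f"A cozy {text} made of wool"


report = evaluate(
    stub_generate,
    [
        {"input": "blanket", "required_keywords": ["blanket", "wool"]},
        {"input": "scarf", "required_keywords": ["silk"]},
    ],
)
```

The list of failing inputs is returned alongside the pass rate so that the cases needing further adjustment can be inspected directly.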

Task 6 – Pre-processing and Post-processing

Pre-processing involves cleaning and formatting input data so that the model can process it effectively. Post-processing ensures that the output generated by the LLM is polished, accurate, and aligned with the company’s brand voice. These steps are critical for maintaining a high-quality product that meets the user’s needs.
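As a rough illustration, pre-processing might strip markup from raw catalog text, and post-processing might remove phrases the brand avoids and enforce a length limit. The rules below (tag stripping, a banned-phrase list, a word cap) are assumptions chosen for the sketch, not a complete pipeline.

```python
import html
import re


def preprocess(raw):
    """Decode HTML entities, strip tags, and collapse whitespace in raw input text."""
    text = html.unescape(raw)
    text = re.sub(r"<[^>]+>", " ", text)
    return " ".join(text.split())


def postprocess(output, banned_phrases, max_words):
    """Remove banned phrases (case-insensitive) and truncate to a word limit."""
    text = " ".join(output.split())
    for phrase in banned_phrases:
        text = re.sub(re.escape(phrase), "", text, flags=re.IGNORECASE)
    text = " ".join(text.split())  # tidy up gaps left by removed phrases
    words = text.split()
    if len(words) > max_words:
        text = " ".join(words[:max_words]).rstrip(",;:") + "..."
    return text


clean_input = preprocess("<p>Soft &amp; warm   blanket</p>")
clean_output = postprocess("The best ever cozy blanket for winter", ["best ever"], max_words=10)
```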

Example: Structuring Prompts for Scalable Product Descriptions

Consider an ecommerce company developing a product description generator. Structuring the input prompt is key to ensuring the LLM generates accurate and brand-consistent descriptions. A scalable approach might involve a three-part prompt structure: constant brand guidelines, variable product details, and consistent instructions. This modular structure allows businesses to generate unique product descriptions at scale while maintaining a consistent tone and style.

By using a structured and scalable prompt system, companies can streamline product description generation and ensure high-quality output that resonates with their audience.
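The three-part structure described above can be sketched as a small prompt builder. The guideline text, the instruction wording, and the product fields are all made up for illustration; only the split into constant guidelines, variable details, and fixed instructions comes from the approach itself.

```python
# Constant part: the same brand guidelines are reused for every product.
BRAND_GUIDELINES = (
    "Write in a warm, concise tone. Avoid superlatives. "
    "Address the shopper as 'you'."
)

# Consistent part: the task instructions, with only the length limit configurable.
INSTRUCTIONS = "Write one product description of at most {max_words} words."


def build_prompt(product: dict, max_words: int = 80) -> str:
    """Assemble the prompt from guidelines, per-product details, and instructions."""
    # Variable part: one "key: value" line per product attribute.
    details = "\n".join(f"{key}: {value}" for key, value in product.items())
    return "\n\n".join([
        "Brand guidelines:\n" + BRAND_GUIDELINES,
        "Product details:\n" + details,
        "Instructions:\n" + INSTRUCTIONS.format(max_words=max_words),
    ])


prompt = build_prompt({"name": "Wool throw blanket", "material": "merino wool"})
```

Because only the middle section changes, the same function can be run over an entire catalog while the tone and task definition stay fixed.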

Section 3: Model Deployment

Objective: Integrate and deploy the LLM into the real-world environment

Once the LLM has been built, trained, and refined, the next crucial step is deploying it into the intended real-world environment. This phase marks the transition from development to actual usage, allowing the model to begin performing tasks as designed. The goal is to make the LLM accessible to end-users in a seamless and efficient manner, ensuring that it meets the product’s functional requirements.

Deployment Methods

There are several deployment methods available, and the choice depends on the technical infrastructure of the company and the specific requirements of the product. Common methods include:

- API Integration: One of the most straightforward ways to deploy an LLM is through an API. The API acts as a bridge between the platform and the LLM, enabling easy transmission of input queries and retrieval of responses. This method is popular because it allows for real-time integration without the need for hosting the model on internal servers.

- SDKs (Software Development Kits): SDKs offer developers pre-built tools and libraries to interact with the LLM, simplifying integration. This method is often favored by companies that want to maintain more control over how the model is integrated with their system.

- Cloud Servers: Deploying an LLM via cloud services such as Amazon SageMaker, Amazon Bedrock, or Google Cloud Platform offers scalability and ease of access. Cloud deployment is ideal for organizations with fluctuating workloads, as it allows the model to scale up or down based on demand.

Regardless of the method, it’s critical to ensure that the deployment environment aligns with the project’s needs, including security, performance, and cost management.
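Whichever method is chosen, the calling code usually looks similar: send a request, parse the response, and retry on transient failures. The sketch below keeps the transport abstract by accepting a `send` callable, so it does not depend on any specific vendor API; the JSON field names `prompt` and `text` and the retry settings are assumptions for illustration.

```python
import json
import time


def call_llm(send, prompt, max_retries=3, backoff_seconds=0.0):
    """Send a prompt through `send` and return the generated text, retrying on outages."""
    payload = json.dumps({"prompt": prompt})  # hypothetical request shape
    last_error = None
    for attempt in range(max_retries):
        try:
            response = json.loads(send(payload))
            return response["text"]  # hypothetical response field
        except (ConnectionError, TimeoutError) as err:
            last_error = err
            time.sleep(backoff_seconds * (2 ** attempt))  # exponential backoff
    raise RuntimeError(f"LLM call failed after {max_retries} attempts") from last_error


def make_flaky_send(failures):
    """Build a fake transport that fails a set number of times, then echoes the prompt."""
    state = {"remaining": failures}

    def send(payload):
        if state["remaining"] > 0:
            state["remaining"] -= 1
            raise ConnectionError("simulated outage")
        prompt = json.loads(payload)["prompt"]
        return json.dumps({"text": f"echo: {prompt}"})

    return send


result = call_llm(make_flaky_send(failures=1), "Describe a wool blanket")
```

Injecting the transport also makes the integration testable without network access, as the fake `send` above shows.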

Quality Assurance

Before going live, it is essential to conduct rigorous quality assurance (QA) and testing to ensure that the model performs reliably under various conditions. QA testing helps identify and resolve issues related to model behavior, response time, and interaction with other system components. During this phase, any bugs or inconsistencies should be addressed, ensuring that the model can handle real-world inputs and deliver accurate outputs consistently.

A final review should also include performance benchmarks to verify that the model meets the initial goals outlined during the preparation phase.

Section 4: Monitoring Results

Objective: Analyze the performance and impact of the deployed LLM product

Once the LLM product is deployed and functioning, the next step is to monitor its performance and evaluate the impact it has on business operations. This phase is critical for ensuring that the product is delivering the desired outcomes, both in terms of technical performance and business value.

Performance Metrics

Tracking performance metrics is essential to understanding how well the LLM is functioning. These metrics can include:

- Latency: The time it takes for the model to process inputs and generate outputs.

- Accuracy: How closely the model’s outputs match the expected results or user needs.

- Error Rates: Tracking any failures or inconsistencies in the model’s outputs.

- Scalability: How well the model performs under increasing loads or higher volumes of input.

Monitoring these metrics provides insights into the technical health of the model and can guide further improvements or adjustments.
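Several of these metrics can be computed directly from request logs. The sketch below assumes each log entry records a latency in milliseconds and a success flag, which is an illustrative format; it reports mean latency, a nearest-rank 95th percentile, and the error rate.

```python
def summarize(calls):
    """Summarize logged calls: mean latency, nearest-rank p95 latency, and error rate."""
    if not calls:
        raise ValueError("no calls to summarize")
    latencies = sorted(c["latency_ms"] for c in calls)
    n = len(latencies)
    # Nearest-rank percentile: rank = ceil(0.95 * n), computed with integers
    # to avoid floating-point rounding surprises.
    rank = (95 * n + 99) // 100
    errors = sum(1 for c in calls if not c["ok"])
    return {
        "mean_latency_ms": sum(latencies) / n,
        "p95_latency_ms": latencies[rank - 1],
        "error_rate": errors / n,
    }


calls = [
    {"latency_ms": 100, "ok": True},
    {"latency_ms": 200, "ok": True},
    {"latency_ms": 300, "ok": False},
    {"latency_ms": 400, "ok": True},
]
summary = summarize(calls)
```

Tracking a high percentile alongside the mean is useful because a small number of very slow responses can be hidden by an acceptable average.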

User Feedback and Engagement

Another important aspect of monitoring is gathering user feedback. User engagement data helps companies understand how the end-users are interacting with the product, what features are most valuable to them, and whether the product is meeting their expectations. Feedback can be collected through user surveys, in-app analytics, or customer support channels.

For example, an ecommerce company using an LLM-powered product description generator can track how shoppers respond to the new descriptions, such as whether sales increase or product pages see more traffic, to evaluate the effectiveness of the LLM integration.
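A simple way to quantify this kind of comparison is the relative change in conversion rate before and after the new descriptions go live. The figures below are invented for illustration, and a raw lift like this does not by itself show that the descriptions caused the change; a controlled experiment would be needed for that.

```python
def conversion_rate(orders, visits):
    """Fraction of page visits that ended in an order."""
    if visits <= 0:
        raise ValueError("visits must be positive")
    return orders / visits


def relative_lift(before, after):
    """Relative change from the old rate to the new one (0.2 means +20%)."""
    if before <= 0:
        raise ValueError("baseline rate must be positive")
    return (after - before) / before


# Hypothetical numbers for the same product pages over two equal periods.
rate_before = conversion_rate(orders=50, visits=1000)
rate_after = conversion_rate(orders=60, visits=1000)
lift = relative_lift(rate_before, rate_after)
```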

Continuous Improvement

Monitoring is not a one-time task but an ongoing process. To maintain optimal product performance, companies need to engage in continuous testing and updates. This can involve retraining the model with new data, adjusting parameters to improve accuracy, or incorporating new features based on user feedback. Regular communication with the LLM vendor is also crucial to ensure the latest updates and improvements are integrated into the system.

By maintaining a consistent cycle of testing, feedback collection, and updates, companies can ensure that their LLM product remains relevant, efficient, and capable of delivering value over time.

Continuous improvement also means addressing any emerging issues quickly and effectively. For example, if users report errors or limitations in the model’s outputs, working closely with the LLM vendor to diagnose and solve the problem ensures that the product continues to function optimally.
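One way to catch emerging issues early is to compare recent quality checks against the baseline measured at launch. The sketch below is a minimal example of such a trigger; the tolerance and minimum sample size are arbitrary illustrative values, and the function name `needs_review` is hypothetical.

```python
def needs_review(baseline_rate, recent_results, tolerance=0.05, min_samples=20):
    """Flag the model for review when recent pass rate falls well below the baseline."""
    if len(recent_results) < min_samples:
        return False  # too little data to draw a conclusion
    recent_rate = sum(recent_results) / len(recent_results)
    return recent_rate < baseline_rate - tolerance


# Each entry is True if a sampled output passed the quality check.
degraded = [True] * 16 + [False] * 4   # 80% pass rate
healthy = [True] * 18 + [False] * 2    # 90% pass rate
flag_degraded = needs_review(0.9, degraded)
flag_healthy = needs_review(0.9, healthy)
```

A flagged result would then prompt the steps described above, such as retraining with new data, adjusting parameters, or raising the issue with the LLM vendor.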

In short, deploying and monitoring an LLM product requires careful planning and execution. By choosing the right deployment method, conducting thorough quality assurance, and continuously tracking performance and feedback, companies can successfully integrate LLM products into their workflows and drive meaningful results.

In the rapidly evolving landscape of AI and generative models, product lifecycle management plays a crucial role in the success and sustainability of LLM-based products. Effective LLM product development requires a deep understanding of the unique challenges and opportunities that large language models present. From meticulous planning during the preparation stage to precise execution in the building phase, each step in the product lifecycle is critical for achieving desired outcomes.

The integration of product lifecycle management (PLM) ensures that every phase, from concept to deployment, is strategically managed, reducing risks and maximizing performance. This approach not only aids in delivering a high-quality LLM product but also ensures that the product evolves with user feedback and market demands. With PLM, companies can efficiently navigate the complexities of LLM-based products and make informed decisions throughout the development cycle.

Continuous adaptation is vital in the fast-paced world of LLM applications. The ability to refine and improve the model post-deployment, based on real-world performance metrics and user feedback, is what sets successful products apart. Ongoing monitoring and iteration, coupled with proactive communication with LLM vendors, allow for seamless updates and enhancements, ensuring that the product remains competitive and relevant.

In conclusion, product lifecycle management for LLM-based products is not a one-time effort but a continuous process of learning and improvement. By embracing this dynamic approach, businesses can unlock the full potential of LLM technologies, driving innovation and long-term success in the AI-driven marketplace.
